PyCaret 2.1 Feature Summary

PyCaret 2.1 is here — What’s new?

We are excited to announce PyCaret 2.1, the update for August 2020.

PyCaret is an open-source, low-code machine learning library in Python that automates the machine learning workflow. It is an end-to-end machine learning and model management tool that speeds up the machine learning experiment cycle and makes you 10x more productive.

Compared with other open-source machine learning libraries, PyCaret is a low-code alternative that can replace hundreds of lines of code with just a few. This makes experiments much faster and more efficient.

If you haven’t heard of or used PyCaret before, please see our previous announcement to get started quickly.

Installing PyCaret

Installing PyCaret is easy and takes only a few minutes. We strongly recommend using a virtual environment to avoid potential conflicts with other libraries. For example, create a **conda environment**, activate it, and install PyCaret inside it with pip install pycaret==2.1.

PyCaret 2.1 Feature Summary

👉 Hyperparameter Tuning on GPU

PyCaret 2.0 introduced GPU-enabled training for certain algorithms (XGBoost, LightGBM and CatBoost). New in 2.1, you can also tune the hyperparameters of those models on GPU.

No additional parameter is needed inside the **tune_model** function, as it automatically inherits the tree_method from the xgboost instance created using the **create_model** function. If you are interested in a small comparison, here it is:

100,000 rows with 88 features in a Multiclass problem with 8 classes
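The GPU flow described above can be sketched as follows. This is a minimal illustration, not the original example: the juice sample dataset, the Purchase target column and the session_id value are assumptions, and running it needs a CUDA-capable GPU plus an XGBoost build with GPU support. The imports sit inside the function so the file can be loaded on a machine without PyCaret installed.

```python
# Keyword arguments that create_model forwards to the XGBoost estimator.
# 'gpu_hist' selects XGBoost's GPU histogram tree method.
GPU_PARAMS = {"tree_method": "gpu_hist", "gpu_id": 0}


def tune_xgboost_on_gpu():
    """Train XGBoost on GPU, then tune it; tune_model reuses tree_method."""
    # Imported lazily: requires pycaret==2.1 and a GPU-enabled xgboost.
    from pycaret.classification import create_model, setup, tune_model
    from pycaret.datasets import get_data

    data = get_data("juice")  # assumed sample dataset
    setup(data, target="Purchase", session_id=123)  # assumed target and seed

    xgboost = create_model("xgboost", **GPU_PARAMS)
    # No GPU-specific argument here: it is inherited from the model above.
    return tune_model(xgboost)
```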

👉 Model Deployment

Since the first release of PyCaret in April 2020, you have been able to deploy trained models on AWS simply by using **deploy_model** from your Notebook. This release adds support for deployment on GCP as well as Microsoft Azure.

Microsoft Azure

To deploy a model on Microsoft Azure, an environment variable holding the connection string must be set. The connection string can be obtained from the ‘Access Keys’ section of your storage account in Azure.

Once you have copied the connection string, you can set it as an environment variable. See example below:
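A minimal sketch of this step, assuming a trained model is already available in your session. The connection string value, the model name and the container name are placeholders, and Azure's storage client library may need to be installed separately. PyCaret is imported inside the functions so the snippet loads even where it is not installed.

```python
import os

# Placeholder only: paste the real value from 'Access Keys' in the Azure portal.
os.environ["AZURE_STORAGE_CONNECTION_STRING"] = "<your-connection-string>"

# Hypothetical names used for illustration.
MODEL_NAME = "model-name"
AZURE_AUTH = {"container": "container-name"}


def deploy_to_azure(model):
    """Upload a trained PyCaret pipeline to an Azure storage container."""
    from pycaret.classification import deploy_model  # needs pycaret==2.1

    deploy_model(
        model=model,
        model_name=MODEL_NAME,
        platform="azure",
        authentication=AZURE_AUTH,
    )


def load_from_azure():
    """Fetch the deployed pipeline back from the same container."""
    from pycaret.classification import load_model

    return load_model(
        model_name=MODEL_NAME,
        platform="azure",
        authentication=AZURE_AUTH,
    )
```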

BOOM! That’s it. With just one line of code, your entire machine learning pipeline is now shipped to the container in Microsoft Azure. You can access it using the load_model function.

Google Cloud Platform

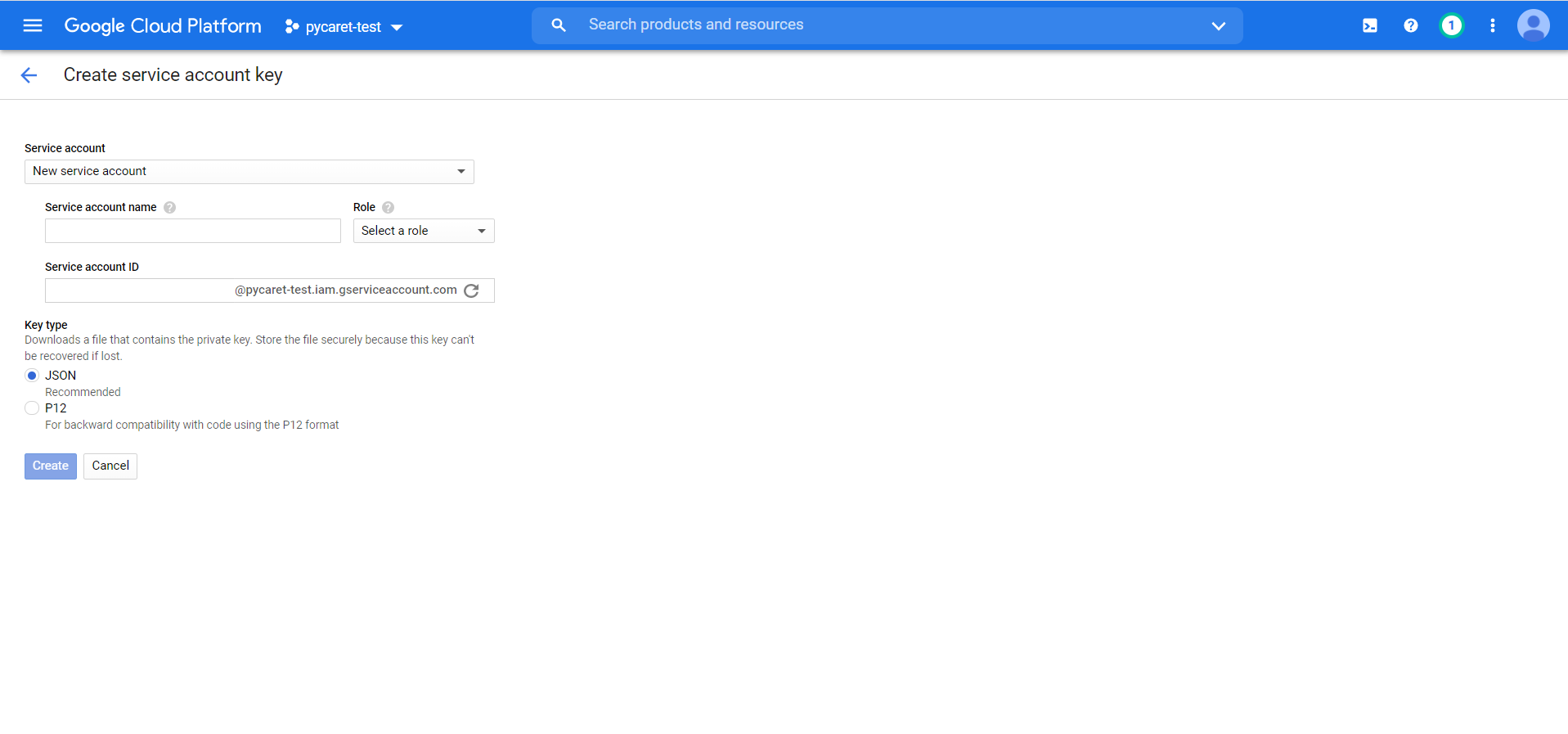

To deploy a model on Google Cloud Platform (GCP), you must first create a project using either the command line or the GCP console. Once the project is created, you must create a service account and download the service account key as a JSON file, which is then used to set the environment variable.

To learn more about creating a service account, read the official documentation. Once you have created a service account and downloaded the JSON file from your GCP console you are ready for deployment.
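The deployment call can be sketched as below, assuming a trained model is already in your session. The key file path, project name, bucket name and model name are all hypothetical placeholders to replace with your own values.

```python
import os

# Hypothetical path: point this at the service account key you downloaded.
os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = "path/to/service-account-key.json"

# Hypothetical project and bucket names.
GCP_AUTH = {"project": "project-name", "bucket": "bucket-name"}


def deploy_to_gcp(model, model_name="model-name"):
    """Upload a trained PyCaret pipeline to a GCP storage bucket."""
    from pycaret.classification import deploy_model  # needs pycaret==2.1

    deploy_model(
        model=model,
        model_name=model_name,
        platform="gcp",
        authentication=GCP_AUTH,
    )


def load_from_gcp(model_name="model-name"):
    """Fetch the deployed pipeline back from the same bucket."""
    from pycaret.classification import load_model

    return load_model(
        model_name=model_name,
        platform="gcp",
        authentication=GCP_AUTH,
    )
```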

Model uploaded. You can now access the model from the GCP bucket using the load_model function.

👉 MLflow Deployment

In addition to using PyCaret’s native deployment functionalities, you can now also use all the MLflow deployment capabilities. To use those, you must log your experiment using the log_experiment parameter in the **setup** function.
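As a rough sketch of what logging an experiment looks like, the snippet below passes log_experiment to setup and then trains a few models so there are runs to inspect. The juice dataset, the Purchase target and the experiment name are assumptions chosen for illustration.

```python
# Arguments that switch on MLflow logging in setup.
LOGGING_PARAMS = {
    "log_experiment": True,
    "experiment_name": "demo-experiment",  # hypothetical name
}


def run_logged_experiment():
    """Initialize an experiment with MLflow logging and train models."""
    # Imported lazily: requires pycaret==2.1.
    from pycaret.classification import compare_models, setup
    from pycaret.datasets import get_data

    data = get_data("juice")  # assumed sample dataset
    setup(data, target="Purchase", **LOGGING_PARAMS)

    # Each model trained from here on is recorded as an MLflow run.
    return compare_models()
```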

Now start the MLflow tracking UI (for example, by running mlflow ui in the working directory) and open http://localhost:5000 in your favorite browser.

You can see the details of a run by clicking the “Start Time” shown to the left of “Run Name”. Inside, you will find all the hyperparameters and scoring metrics of the trained model, and if you scroll down a little, all the artifacts as well (see below).

The trained model, along with other metadata files, is stored under the directory “/model”. MLflow follows a standard format for packaging machine learning models that can be used in a variety of downstream tools, for example real-time serving through a REST API or batch inference on Apache Spark. If you want to serve this model locally, you can do so using the MLflow command line.

You can then send a request to the model using curl to get predictions.

(Note: This functionality of MLflow is not supported on Windows OS yet).
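For readers who prefer Python to curl, the request can be sketched with the standard library. This assumes a model server is listening on port 1234 of the local machine and that it accepts the pandas-split JSON layout used by MLflow 1.x releases (the versions current when PyCaret 2.1 shipped); newer MLflow versions expect a different payload, so check the documentation for the version you have installed. The host, port, feature names and values are hypothetical.

```python
import json
from urllib import request


def build_invocation_request(columns, rows, host="127.0.0.1", port=1234):
    """Build (but do not send) a POST request for an MLflow scoring server."""
    # pandas-split layout: column names once, then one list per row.
    payload = {"columns": list(columns), "data": [list(r) for r in rows]}
    return request.Request(
        url=f"http://{host}:{port}/invocations",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json; format=pandas-split"},
        method="POST",
    )


# Hypothetical feature names and values, for illustration only.
req = build_invocation_request(["age", "income"], [[42, 55000.0]])

# With a model server running, the prediction could be fetched with:
# with request.urlopen(req) as resp:
#     print(json.loads(resp.read()))
```

Building the request separately from sending it keeps the payload easy to inspect before any network call is made.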

MLflow also provides integration with AWS SageMaker and Azure Machine Learning Service. You can deploy models locally in a Docker container with a SageMaker-compatible environment or remotely on SageMaker. To deploy remotely to SageMaker you need to set up your environment and AWS user account.

Example workflow using the MLflow CLI

To learn more about all deployment capabilities of MLflow, click here.

👉 MLflow Model Registry

The MLflow Model Registry component is a centralized model store, set of APIs, and UI, to collaboratively manage the full lifecycle of an MLflow Model. It provides model lineage (which MLflow experiment and run produced the model), model versioning, stage transitions (for example from staging to production), and annotations.

If running your own MLflow server, you must use a database-backed backend store in order to access the model registry. Click here for more information. However, if you are using Databricks or any of the managed Databricks services such as Azure Databricks, you don’t need to worry about setting up anything. It comes with all the bells and whistles you would ever need.

👉 High-Resolution Plotting

This is not ground-breaking, but it is a very useful addition for people using PyCaret for research and publications. The plot_model function now has an additional parameter called “scale”, through which you can control the resolution and generate high-quality plots for your publications.
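A small sketch of the scale parameter in use, assuming a trained classifier is already available. The choice of the AUC plot and the value 5 are illustrative only; larger values should give a higher-resolution image.

```python
# Plot type and resolution multiplier passed to plot_model.
PLOT_PARAMS = {"plot": "auc", "scale": 5}


def plot_high_res_auc(model):
    """Draw the AUC plot for a trained classifier at a higher resolution."""
    from pycaret.classification import plot_model  # needs pycaret==2.1

    plot_model(model, **PLOT_PARAMS)
```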

👉 User-Defined Loss Function

This has been one of the most requested features ever since the release of the first version. Being able to tune the hyperparameters of a model using a custom, user-defined function gives immense flexibility to data scientists. It is now possible to use user-defined custom loss functions through the **custom_scorer** parameter in the **tune_model** function.
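To make this concrete, here is a sketch under stated assumptions: a hypothetical cost-weighted loss in which a missed positive is five times as expensive as a false alarm, wrapped with scikit-learn's make_scorer and handed to tune_model. The loss itself, the credit sample dataset, the default target and the choice of logistic regression are all illustrative.

```python
def weighted_error_cost(y_true, y_pred, fn_cost=5.0, fp_cost=1.0):
    """Average misclassification cost for binary labels (1 = positive).

    A missed positive (false negative) costs fn_cost; a false alarm
    (false positive) costs fp_cost. Lower is better.
    """
    total = 0.0
    count = 0
    for truth, pred in zip(y_true, y_pred):
        count += 1
        if truth == 1 and pred != 1:
            total += fn_cost
        elif truth != 1 and pred == 1:
            total += fp_cost
    return total / count if count else 0.0


def tune_with_custom_loss():
    """Tune a model so that the search minimizes weighted_error_cost."""
    # Imported lazily: requires scikit-learn and pycaret==2.1.
    from sklearn.metrics import make_scorer
    from pycaret.classification import create_model, setup, tune_model
    from pycaret.datasets import get_data

    data = get_data("credit")  # assumed sample dataset with a 0/1 target
    setup(data, target="default")

    # greater_is_better=False tells scikit-learn this is a loss to minimize.
    scorer = make_scorer(weighted_error_cost, greater_is_better=False)
    model = create_model("lr")
    return tune_model(model, custom_scorer=scorer)
```

Keeping the loss as a plain function of y_true and y_pred means it can be checked on small hand-made inputs before it is used in a search.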

👉 Feature Selection

Feature selection is a fundamental step in machine learning. You have a bunch of features at your disposal and you want to keep only the relevant ones and discard the others. The aim is to simplify the problem by removing unhelpful features that would introduce unnecessary noise.

PyCaret 2.1 introduces a Python implementation of the Boruta algorithm (originally implemented in R). Boruta is a pretty smart algorithm, dating back to 2010, designed to automatically perform feature selection on a dataset. To use it, you simply have to pass the **feature_selection_method** parameter within the setup function.
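A minimal sketch of enabling Boruta, assuming the juice sample dataset with Purchase as the target. Note that feature_selection is switched on alongside the method name.

```python
# Arguments that enable Boruta-based feature selection in setup.
FEATURE_SELECTION_PARAMS = {
    "feature_selection": True,
    "feature_selection_method": "boruta",
}


def setup_with_boruta():
    """Initialize an experiment that selects features with Boruta."""
    # Imported lazily: requires pycaret==2.1.
    from pycaret.classification import setup
    from pycaret.datasets import get_data

    data = get_data("juice")  # assumed sample dataset
    return setup(data, target="Purchase", **FEATURE_SELECTION_PARAMS)
```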

To read more about the Boruta algorithm, click here.

👉 Other Changes

The blacklist and whitelist parameters in the compare_models function have been renamed to exclude and include, with no change in functionality.

To set an upper limit on training time in the compare_models function, a new parameter, budget_time, has been added.

PyCaret is now compatible with the Pandas categorical datatype. Internally, categorical columns are converted to object and treated the same way as object or bool columns.

Numeric imputation: a new method, zero, has been added to the numeric_imputation parameter in the setup function. When the method is set to zero, missing values are replaced with the constant 0.

To make the output more human-readable, the Label column returned by the predict_model function now contains the original value instead of the encoded value.
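Several of the changes above can be seen together in one short sketch. The dataset, target, excluded model and time budget are assumptions for illustration, and budget_time is understood here to be expressed in minutes.

```python
# Replace missing numeric values with the constant 0.
SETUP_PARAMS = {"numeric_imputation": "zero"}

COMPARE_PARAMS = {
    "exclude": ["catboost"],  # formerly the blacklist parameter
    "budget_time": 0.5,       # assumed unit: minutes
}


def compare_within_budget():
    """Compare models with zero imputation, an exclusion list and a time cap."""
    # Imported lazily: requires pycaret==2.1.
    from pycaret.classification import compare_models, setup
    from pycaret.datasets import get_data

    data = get_data("juice")  # assumed sample dataset
    setup(data, target="Purchase", **SETUP_PARAMS)
    return compare_models(**COMPARE_PARAMS)
```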

To learn more about all the updates in PyCaret 2.1, please see the release notes.

There is no limit to what you can achieve using the lightweight workflow automation library in Python. If you find this useful, please do not forget to give us ⭐️ on our GitHub repo.

To hear more about PyCaret, follow us on LinkedIn and YouTube.

Important Links

User Guide, Documentation, Official Tutorials, Example Notebooks, Other Resources

Want to learn about a specific module?

Click on the links below to see the documentation and working examples.

Classification, Regression, Clustering, Anomaly Detection, Natural Language Processing, Association Rule Mining
