NLP Text Classification in Python using PyCaret

NLP Text Classification in Python: PyCaret Approach vs. the Traditional Approach

A comparative analysis of the traditional approach and the PyCaret approach

by Prateek Baghel

I. Introduction

II. The NLP Text-Classification Problem

III. Traditional Approach

Stage 1. Data Setup and Preprocessing of the Text Data

Stage 2. Embedding the Processed Text Data

Stage 3. Model Building

Stage 4. Hyperparameter Tuning

IV. PyCaret Approach

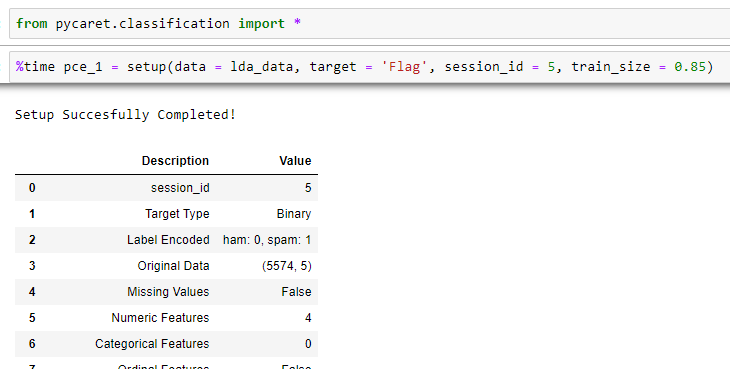

Stage 1. Data Setup and Preprocessing of the Text Data

Stage 2. Embedding the Processed Text Data

Stage 3. Model Building

Stage 4. Hyperparameter Tuning

V. Comparison of the Two Methods

VI. Important Links
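The four stages listed under the traditional approach (preprocessing, embedding, model building, hyperparameter tuning) can be sketched with scikit-learn. This is a minimal illustration under stated assumptions, not the author's code: the toy corpus, TF-IDF as the embedding, logistic regression as the classifier, and the parameter grid are all hypothetical choices made for the example.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline

# Hypothetical toy corpus (assumption): not the dataset used in the article.
texts = [
    "I loved this movie, the acting was wonderful",
    "A wonderful story with brilliant performances",
    "Great film, I would happily watch it again",
    "The plot was engaging and the cast was excellent",
    "An excellent and moving film",
    "Brilliant direction and a great soundtrack",
    "I hated this movie, the acting was terrible",
    "A boring story with awful performances",
    "Terrible film, I walked out halfway through",
    "The plot was dull and the cast was weak",
    "An awful and tedious film",
    "Poor direction and a forgettable soundtrack",
]
labels = ["positive"] * 6 + ["negative"] * 6

pipeline = Pipeline([
    # Stages 1-2 (assumed choices): lowercase, drop English stop words,
    # and embed each document as a TF-IDF vector.
    ("tfidf", TfidfVectorizer(lowercase=True, stop_words="english")),
    # Stage 3 (assumed choice): a linear classifier on the TF-IDF features.
    ("clf", LogisticRegression(max_iter=1000)),
])

# Stage 4: exhaustive grid search with 3-fold cross-validation over a small,
# hypothetical grid of vectorizer and classifier hyperparameters.
param_grid = {
    "tfidf__ngram_range": [(1, 1), (1, 2)],
    "clf__C": [0.1, 1.0, 10.0],
}
search = GridSearchCV(pipeline, param_grid, cv=3)
search.fit(texts, labels)

print(search.best_params_)
print(search.predict(["A brilliant and moving story"]))
```

Wrapping the vectorizer and classifier in one `Pipeline` means the TF-IDF vocabulary is refit inside each cross-validation fold, so the tuning scores are not inflated by vocabulary learned from the held-out fold.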
