Machine Learning in KNIME with PyCaret

A step-by-step guide on training and scoring machine learning models in KNIME using PyCaret

PyCaret

PyCaret is an open-source, low-code machine learning library and end-to-end model management tool built in Python for automating machine learning workflows. It is easy to use and lets you build and deploy end-to-end machine learning pipelines quickly and efficiently.

PyCaret is a low-code alternative that can replace hundreds of lines of code with just a few. This makes the experiment cycle much faster and more efficient.

PyCaret is **simple and easy to use.** All the operations performed in PyCaret are sequentially stored in a Pipeline that is fully automated for **deployment.** Whether it’s imputing missing values, one-hot-encoding, transforming categorical data, feature engineering, or even hyperparameter tuning, PyCaret automates all of it. To learn more about PyCaret, watch this 1-minute video.

KNIME

KNIME Analytics Platform is open-source software for creating data science. Intuitive, open, and continuously integrating new developments, KNIME makes understanding data and designing data science workflows and reusable components accessible to everyone.

KNIME Analytics Platform is one of the most popular open-source platforms used in data science to automate the data science process. KNIME has thousands of nodes in its node repository, which you can drag and drop onto the KNIME workbench. A collection of interrelated nodes forms a workflow that can be executed locally or, after deploying the workflow to KNIME Server, in the KNIME web portal.

Installation

For this tutorial, you will need two things. The first is the KNIME Analytics Platform, a desktop application that you can download from the KNIME website. The second is Python.

The easiest way to get started with Python is to download the Anaconda Distribution from the Anaconda website.

Once you have both the KNIME Analytics Platform and Python installed, create a separate conda environment for PyCaret. Open the Anaconda Prompt, create and activate a new environment (this tutorial calls it knimeenv), and install PyCaret into it with pip.

Now open the KNIME Analytics Platform, go to File → Install KNIME Extensions → KNIME & Extensions, select KNIME Python Extension, and install it.

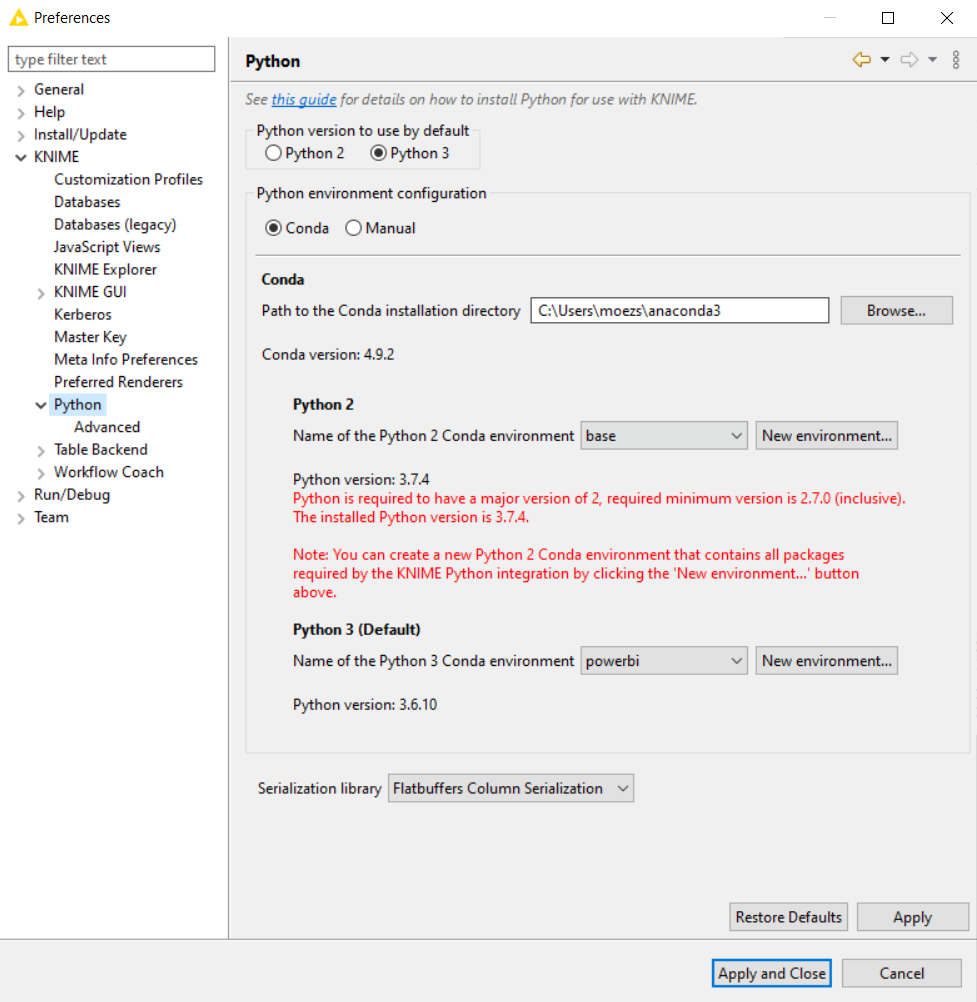

Once installation completes, go to File → Preferences → KNIME → Python and select your Python 3 environment. In my case the environment is named “powerbi”. If you followed the steps above, yours is named “knimeenv”.
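Before moving on, it can help to confirm that the environment you selected actually contains PyCaret. The following is a minimal sketch, not part of the original tutorial: `check_environment` is a hypothetical helper name, and the script is meant to be run with the conda environment active (for example from the Anaconda Prompt).

```python
import importlib.util
import sys


def check_environment(packages=("pycaret", "pandas")):
    """Return {package name: True/False} for whether each package is importable."""
    return {name: importlib.util.find_spec(name) is not None for name in packages}


if __name__ == "__main__":
    # The interpreter path should point inside the conda environment chosen in KNIME.
    print("Interpreter:", sys.executable)
    for name, found in check_environment().items():
        print(f"{name}: {'found' if found else 'MISSING'}")
```

If PyCaret is reported as missing, the most likely cause is that it was installed into a different environment from the one selected in the KNIME preferences.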

👉 We are ready now

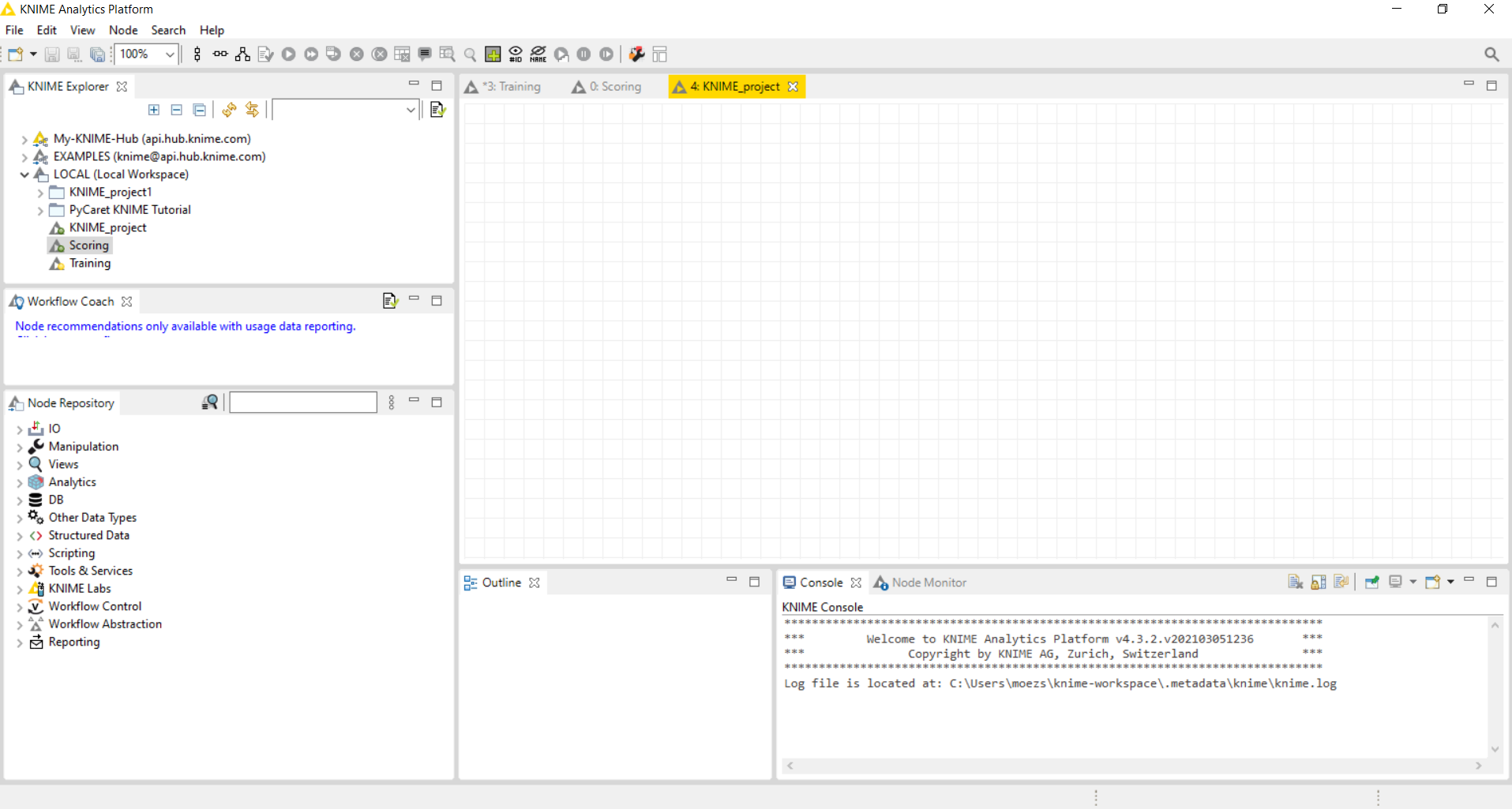

Click on “New KNIME Workflow” and a blank canvas will open.

On the left-hand side, there are tools that you can drag and drop on the canvas and execute the workflow by connecting each component to one another. All the actions in the repository on the left side are called Nodes.

Dataset

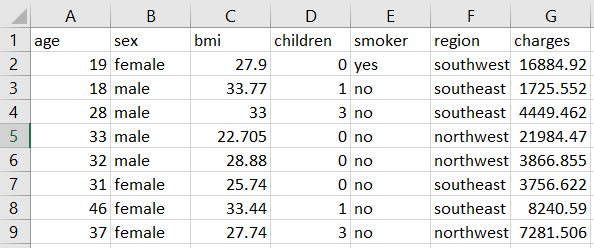

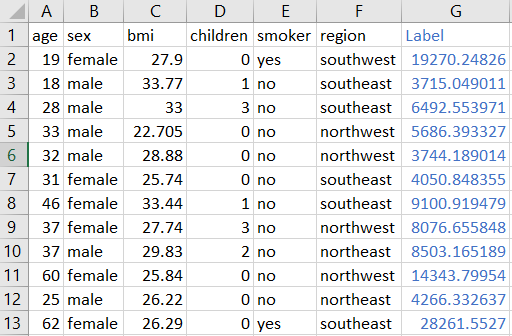

For this tutorial, I am using a regression dataset from PyCaret’s repository called ‘insurance’. You can download the data from that repository.
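If you want to look at the data outside KNIME first, PyCaret can fetch its bundled datasets by name. This is a small sketch under a few assumptions: `load_insurance` is a hypothetical wrapper, the call needs an internet connection, and it is intended for a regular Python session rather than the KNIME workflow, where the CSV Reader node does the loading.

```python
def load_insurance():
    """Fetch the 'insurance' dataset from PyCaret's dataset repository.

    Returns a pandas DataFrame. PyCaret is imported inside the function so
    that this file can still be imported where PyCaret is not installed.
    """
    from pycaret.datasets import get_data

    return get_data("insurance")
```

Calling `load_insurance()` and then saving the result with the DataFrame's `to_csv` method is one way to produce a local file for the CSV Reader node.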

I will create two separate workflows. The first is for model training and selection, and the second is for scoring new data using the trained pipeline.

👉 Model Training & Selection

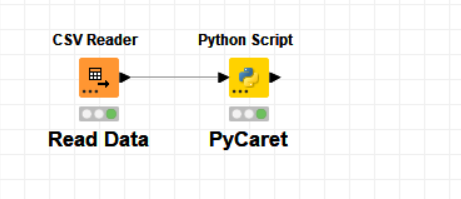

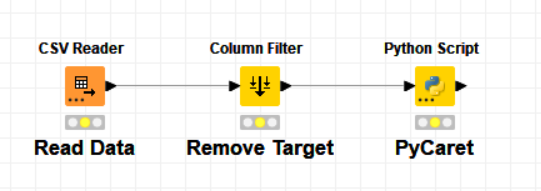

Let’s first read the CSV file with the CSV Reader node, followed by a **Python Script** node. Inside the Python Script node, run a short PyCaret training script.

This script imports the regression module from pycaret and then calls the setup function, which automatically handles the train/test split and all the data preparation tasks such as missing value imputation, scaling, and feature engineering. compare_models trains and evaluates all the estimators using k-fold cross-validation and returns the best model. The pull function returns the model performance metrics as a DataFrame, which is then saved as results.csv on a local drive. Finally, save_model saves the entire transformation pipeline and model as a pickle file.
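The steps described above can be sketched roughly as follows. This is an illustrative reconstruction, not the author's exact script: `train_and_save` and the `out_dir` parameter are hypothetical names, and `setup_kwargs` is just a pass-through for version-specific options.

```python
from pathlib import Path


def train_and_save(data, target="charges", out_dir=".", **setup_kwargs):
    """Train with PyCaret, then write results.csv and pipeline.pkl to out_dir.

    data is a pandas DataFrame that includes the target column.
    """
    # Imported here so the file can be loaded even where PyCaret is absent.
    from pycaret.regression import compare_models, pull, save_model, setup

    out = Path(out_dir)

    # Initialise the experiment: train/test split plus preprocessing.
    # PyCaret 2.x asks for interactive confirmation unless silent=True is
    # passed; PyCaret 3.x removed that argument, so it is left to the caller.
    setup(data=data, target=target, **setup_kwargs)

    # Cross-validate every available estimator and keep the best one.
    best = compare_models()

    # pull() returns the most recent score grid as a DataFrame.
    results = pull()
    results.to_csv(out / "results.csv", index=False)

    # save_model appends ".pkl" to the name it is given.
    save_model(best, str(out / "pipeline"))
    return results
```

Inside the KNIME Python Script node, the incoming table is exposed as a pandas DataFrame. The variable name depends on the KNIME version (for example `input_table_1` in the KNIME 4.x Python Script node), so treat any specific name as an assumption and check the node's own description.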

When you successfully execute this workflow, it generates the files pipeline.pkl and results.csv in the folder you specified.

results.csv contains the cross-validated metrics for all the models. The best model, in this case, is Gradient Boosting Regressor.
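To read the winner out of results.csv programmatically, something like the sketch below would do. It assumes the file has a `Model` column and one column per metric (such as `R2`), which is how PyCaret's regression score grid is normally laid out. `best_model` is a hypothetical helper, not a PyCaret function.

```python
import csv


def best_model(results_path, metric="R2", higher_is_better=True):
    """Return the name of the model with the best value for the given metric."""
    with open(results_path, newline="") as handle:
        rows = list(csv.DictReader(handle))
    if not rows:
        raise ValueError("results file has no rows")

    # For error metrics such as MAE or RMSE, pass higher_is_better=False.
    pick = max if higher_is_better else min
    return pick(rows, key=lambda row: float(row[metric]))["Model"]
```

Only the standard library is used here, so the check can run anywhere the CSV file is available, including outside the PyCaret environment.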

👉 Model Scoring

We can now use our pipeline.pkl to score a new dataset. Since I do not have a separate scoring dataset for ‘insurance.csv’, I will drop the target column from the same file, just to demonstrate.

I have used the Column Filter node to remove the target column, i.e. charges. The Python Script node then loads the saved pipeline and generates predictions for the remaining columns.

When you successfully execute this workflow, it will generate predictions.csv.
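A scoring script along these lines would load the saved pipeline and write the predictions. As with the training sketch, this is an assumption-laden illustration rather than the author's original code: `score_and_save` and `model_dir` are hypothetical names.

```python
from pathlib import Path


def score_and_save(data, model_dir="."):
    """Load pipeline.pkl from model_dir, score data, and write predictions.csv.

    data is a pandas DataFrame without the target column.
    """
    # Imported here so the file can be loaded even where PyCaret is absent.
    from pycaret.regression import load_model, predict_model

    folder = Path(model_dir)

    # load_model expects the name without the ".pkl" extension.
    pipeline = load_model(str(folder / "pipeline"))

    # The saved pipeline applies the same preprocessing used in training.
    predictions = predict_model(pipeline, data=data)
    predictions.to_csv(folder / "predictions.csv", index=False)
    return predictions
```

The returned DataFrame contains the input columns plus a prediction column. Its name differs between PyCaret versions (`Label` in 2.x, `prediction_label` in 3.x), so check the output before wiring downstream nodes to it.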

I hope that you will appreciate the ease of use and simplicity of PyCaret. When used within an analytics platform like KNIME, it can save you many hours of coding and then maintaining that code in production. With fewer than 10 lines of code, I have trained and evaluated multiple models using PyCaret and deployed an ML pipeline in KNIME.

Coming Soon!

Next week I will take a deep dive and focus on more advanced functionalities of PyCaret that you can use within KNIME to enhance your machine learning workflows. If you would like to be notified automatically, you can follow me on Medium, LinkedIn, and Twitter.

There is no limit to what you can achieve using this lightweight workflow automation library in Python. If you find this useful, please do not forget to give us ⭐️ on our GitHub repository.

To hear more about PyCaret, follow us on LinkedIn and YouTube, or join us on our Slack channel.

You may also be interested in:

- Build your own AutoML in Power BI using PyCaret 2.0
- Deploy Machine Learning Pipeline on Azure using Docker
- Deploy Machine Learning Pipeline on Google Kubernetes Engine
- Deploy Machine Learning Pipeline on AWS Fargate
- Build and deploy your first machine learning web app
- Deploy PyCaret and Streamlit app using AWS Fargate serverless
- Build and deploy machine learning web app using PyCaret and Streamlit
- Deploy Machine Learning App built using Streamlit and PyCaret on GKE
