Write and train custom ML models using PyCaret

Write and train your own custom machine learning models using PyCaret

A step-by-step, beginner-friendly tutorial on how to write and train custom machine learning models in PyCaret

PyCaret

Installing PyCaret
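PyCaret is installed from PyPI with `pip install pycaret`. The GPLearn and NGBoost models used later are separate packages (for example `pip install gplearn ngboost`) if they are not already present. This minimal sketch uses only the standard library to check which of the three packages are installed. The helper name `installed_version` is hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package: str):
    """Return the installed version string of `package`, or None if it is not installed."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


# Check PyCaret and the two third-party model libraries used later in this tutorial.
for pkg in ("pycaret", "gplearn", "ngboost"):
    print(pkg, installed_version(pkg) or "not installed")
```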

👉 Let’s get started

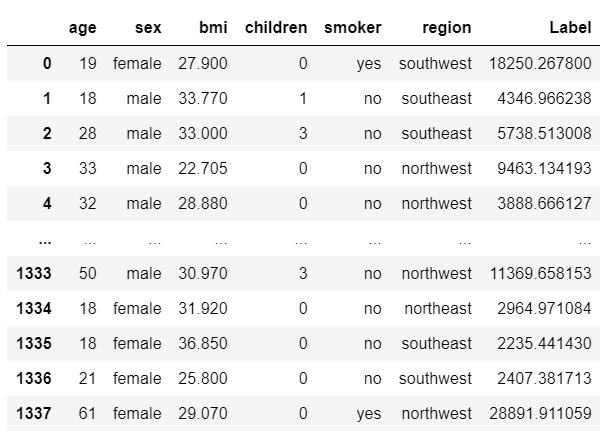

👉 Dataset

👉 Data Preparation

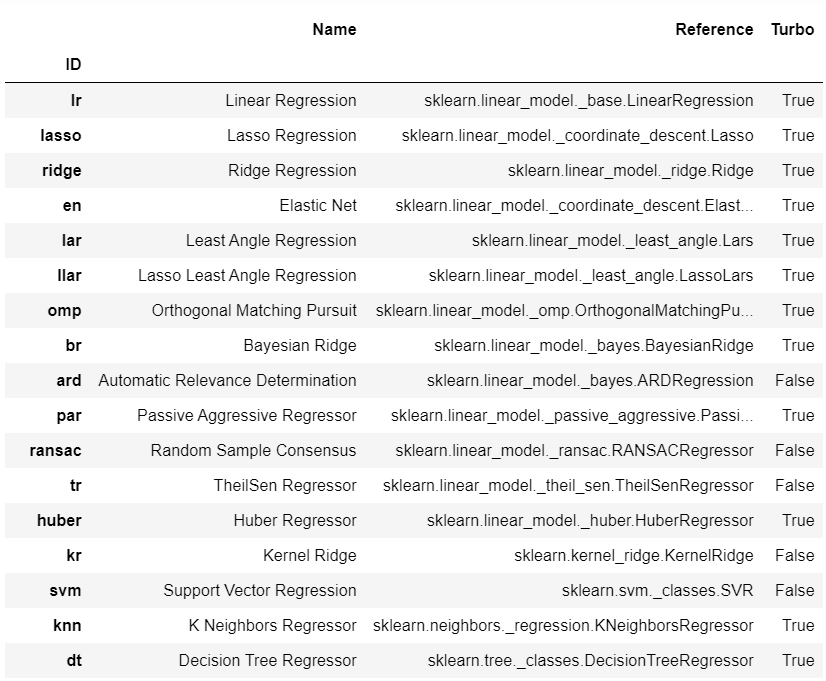

👉 Available Models

👉 Model Training & Selection

👉 Writing and Training Custom Models

👉 GPLearn Models

👉 NGBoost Models

👉 Writing a Custom Class
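To write your own model class, you implement the scikit-learn estimator contract: `__init__` stores hyperparameters, `fit` learns from `X, y` and returns `self`, and `predict` returns predictions. The class below is a hypothetical example, not the class from the original tutorial. It fits ordinary least squares with NumPy. Inheriting from `BaseEstimator` provides `get_params`/`set_params`, which cloning during cross-validation relies on, and `RegressorMixin` adds an R² `score` method.

```python
import numpy as np
from sklearn.base import BaseEstimator, RegressorMixin
from sklearn.utils.validation import check_array, check_is_fitted, check_X_y


class LeastSquaresRegressor(RegressorMixin, BaseEstimator):
    """Hypothetical custom model: ordinary least squares solved with NumPy."""

    def __init__(self, fit_intercept=True):
        # Only store hyperparameters here; BaseEstimator reads them for get_params().
        self.fit_intercept = fit_intercept

    def _design(self, X):
        # Prepend a column of ones so the first coefficient acts as the intercept.
        if self.fit_intercept:
            return np.hstack([np.ones((X.shape[0], 1)), X])
        return X

    def fit(self, X, y):
        X, y = check_X_y(X, y, y_numeric=True)
        coef, *_ = np.linalg.lstsq(self._design(X), y, rcond=None)
        if self.fit_intercept:
            self.intercept_, self.coef_ = coef[0], coef[1:]
        else:
            self.intercept_, self.coef_ = 0.0, coef
        self.n_features_in_ = X.shape[1]
        return self

    def predict(self, X):
        check_is_fitted(self, "coef_")
        X = check_array(X)
        return X @ self.coef_ + self.intercept_
```

Once `setup()` has been run, an instance can be passed to `create_model(LeastSquaresRegressor())` just like the GPLearn and NGBoost objects.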

Coming Soon!

You may also be interested in:

Important Links

Want to learn about a specific module?
