Ship ML Models to SQL Server using PyCaret

Ship Machine Learning Model to Data Using PyCaret — Part II

Binary Classification

by Umar Farooque

My previous post **Machine Learning in SQL using PyCaret 1.0** provided details about integrating **PyCaret** with **SQL Server. In this article, I will provide step-by-step details on how to train and deploy a Supervised Machine Learning Classification model in SQL Server using **PyCaret 2.0 (PyCaret is a low-code ML library in Python).

Things to be covered in this article:

How to load data into SQL Server table

How to create and save a model in SQL Server table

How to make model predictions using the saved model and store results in the table

I. Import/Load Data

You will now have to import CSV file into a database using SQL Server Management Studio.

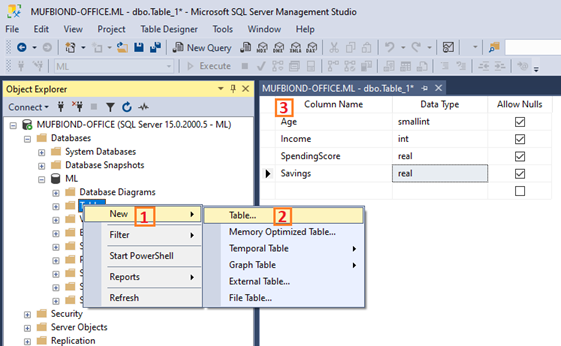

Create a table “cancer” in the database

Right-click the database and select Tasks -> Import Data

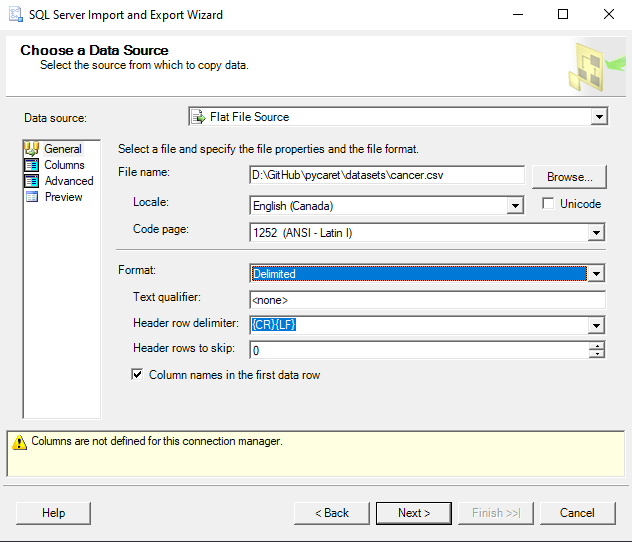

For Data Source, select Flat File Source. Then use the Browse button to select the CSV file. Spend some time configuring the data import before clicking the **Next **button.

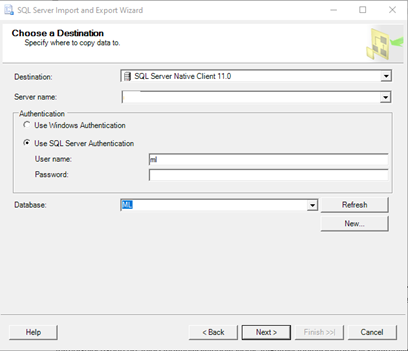

For Destination, select the correct database provider (e.g. SQL Server Native Client 11.0). Enter the Server name; check Use SQL Server Authentication, enter the Username, Password, and **Database **before clicking the **Next **button.

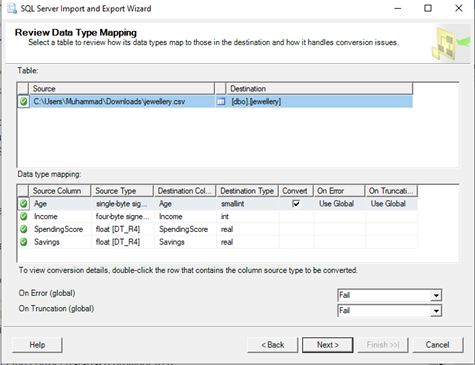

In the Select Source Tables and Views window, you can Edit Mappings before clicking the **Next **button.

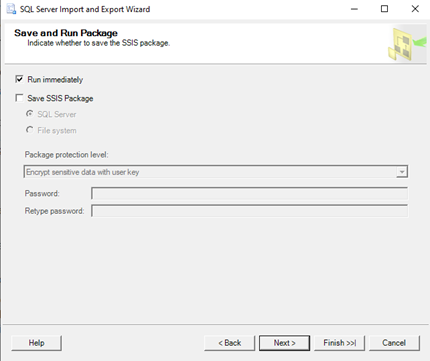

Check Run immediately and click the **Next **button

Click the Finish button to run the package

II. Create ML Model & Save in Database Table

**Classification is a type of supervised machine learning to predict the categorical class labels which are discrete and unordered. The module available in the **PyCaret package can be used for binary or multiclass problems.

In this example, we will be using a ‘Breast Cancer Dataset’. Creating and saving a model in a database table is a multi-step process. Let’s go by them step by step:

i. Create a stored procedure to create a trained model in this case an Extra Trees Classifier algorithm. The procedure will read data from the cancer table created in the previous step.

Below is the code used to create the procedure:

ii. Create a table that is required to store the trained model object

iii. Invoke stored procedure to create a model object and save into a database table

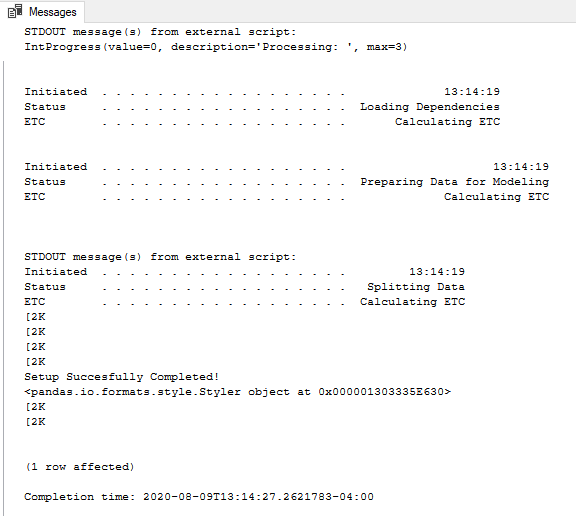

The output of this execution is:

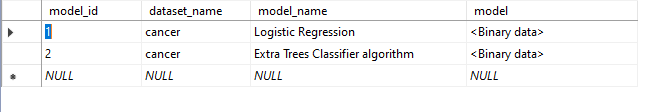

The view of table results after saving model

III. Running Predictions

The next step is to run the prediction for the test dataset based on the saved model. This is again a multi-step process. Let’s go through all the steps again.

i. Create a stored procedure that will use the test dataset to detect cancer for a test datapoint

Below is the code to create a database procedure:

ii. Create a table to save the predictions along with the dataset

iii. Call pycaret_predict_cancer procedure to save predictions result into a table

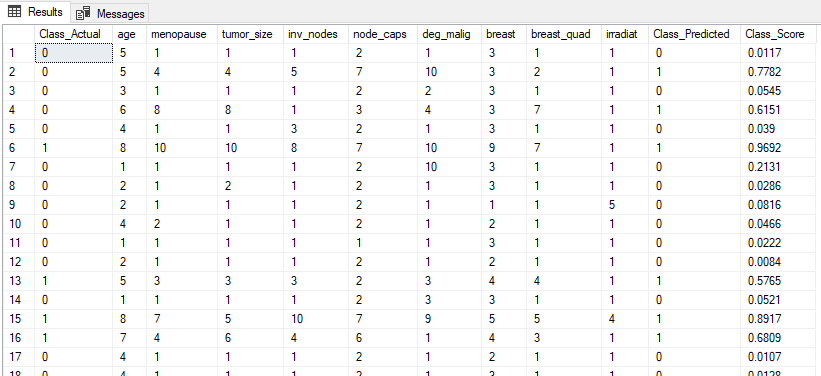

iv. Execute the SQL below to view the result of the prediction

IV. Conclusion

In this post, we learnt how to build a classification model using a PyCaret in SQL Server. Similarly, you can build and run other types of supervised and unsupervised ML models depending on the need of your business problem.

You can further check out the **PyCaret** website for documentation on other supervised and unsupervised experiments that can be implemented in a similar manner within SQL Server.

My future posts will be tutorials on exploring other supervised & unsupervised learning techniques using Python and PyCaret within a SQL Server.

V. Important Links

Last updated

Was this helpful?