Time Series Anomaly Detection with PyCaret

A step-by-step tutorial on unsupervised anomaly detection for time series data using PyCaret

👉 Introduction

Learning Goals of this Tutorial

👉 PyCaret

👉 Installing PyCaret

👉 What is Anomaly Detection

👉 PyCaret Anomaly Detection Module

👉 Dataset

👉 Data Preparation

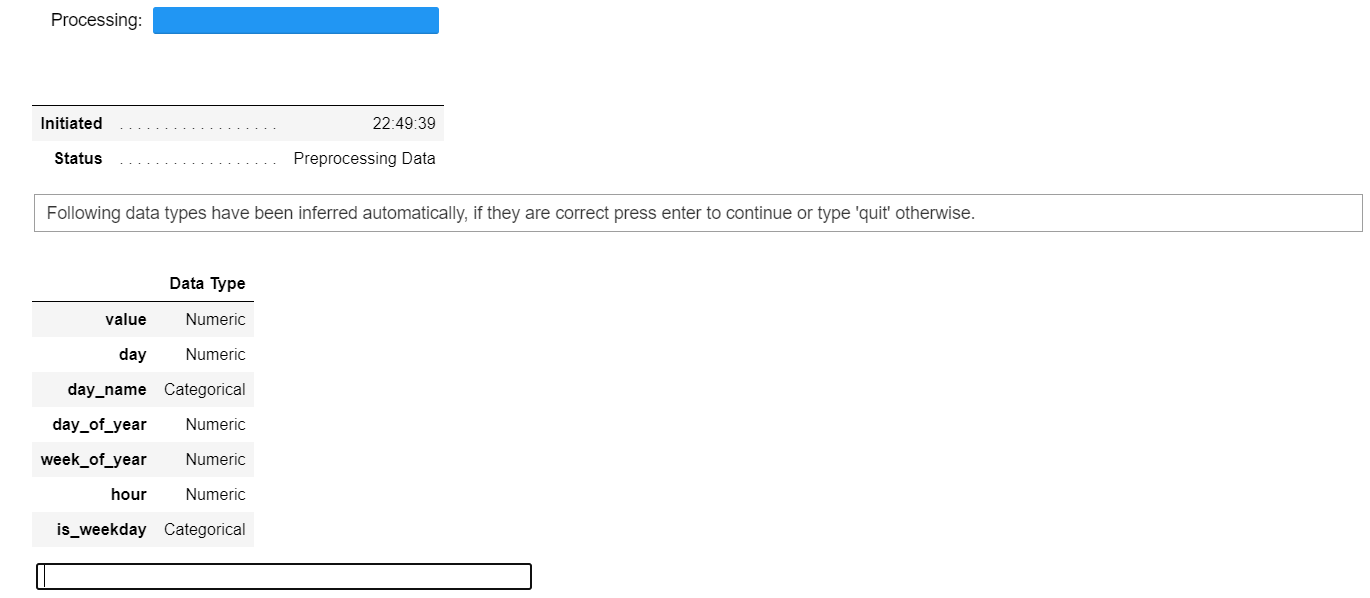

👉 Experiment Setup

👉 Model Training

Coming Soon!

You may also be interested in:

Important Links

Want to learn about a specific module?
