Predict Customer Churn (the right way) using PyCaret

A step-by-step guide to predicting customer churn the right way with PyCaret: building a model that actually optimizes the business objective and improves ROI

Introduction

What is Customer Churn?

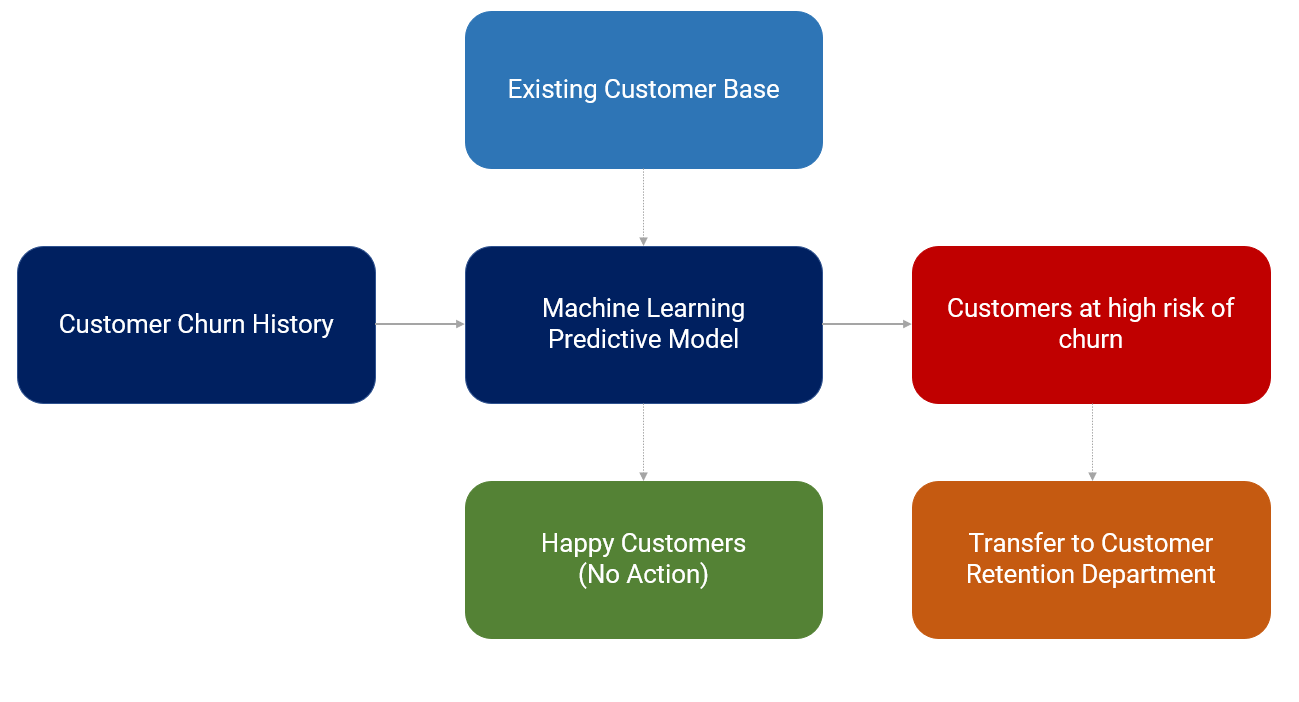
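One common way to put a number on churn is the churn rate over a fixed period. This is a standard definition and not necessarily the exact one used in this tutorial:

$$
\text{churn rate} = \frac{\text{customers lost during the period}}{\text{customers at the start of the period}}
$$

For example, a business that starts a month with 2,000 customers and loses 100 of them during that month has a monthly churn rate of 100 / 2,000 = 5%.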

How is the Customer Churn machine learning model used in practice?

Customer Churn Model Workflow

Let’s get started with the practical example

PyCaret

Install PyCaret

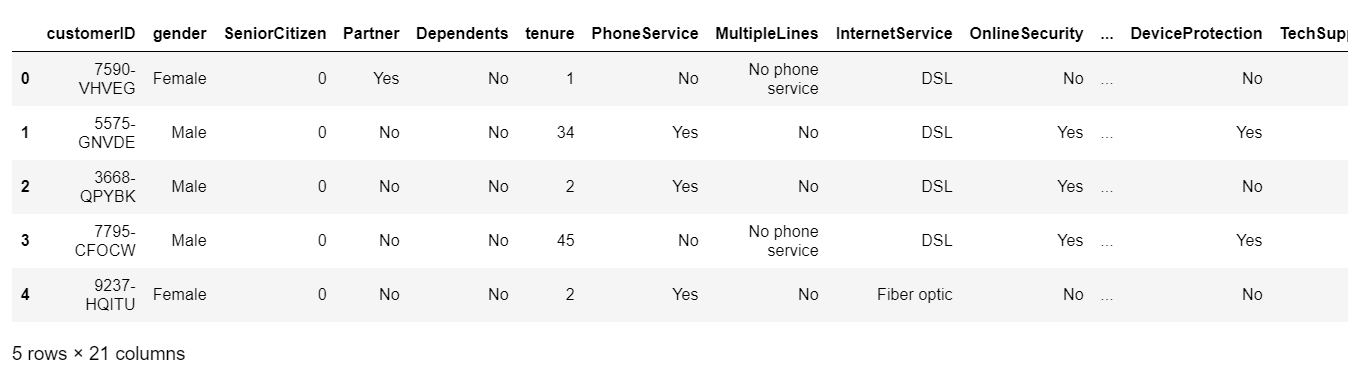

👉 Dataset

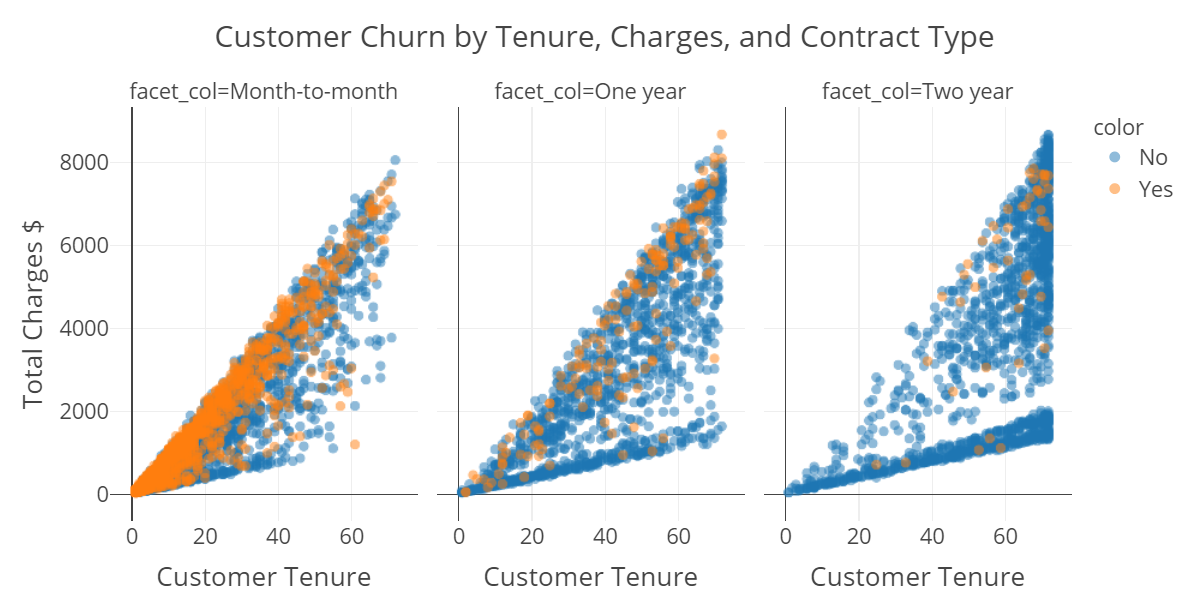

👉 Exploratory Data Analysis

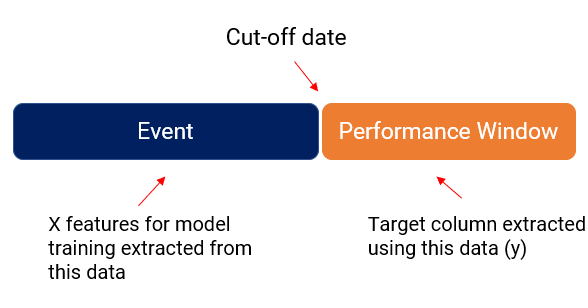

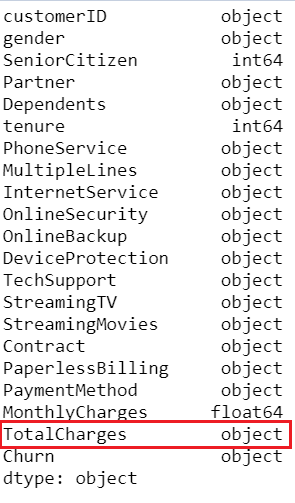

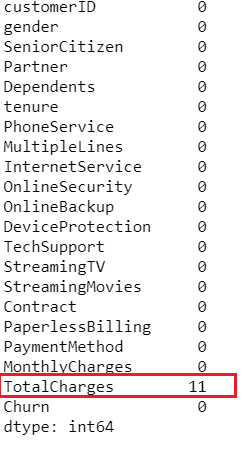

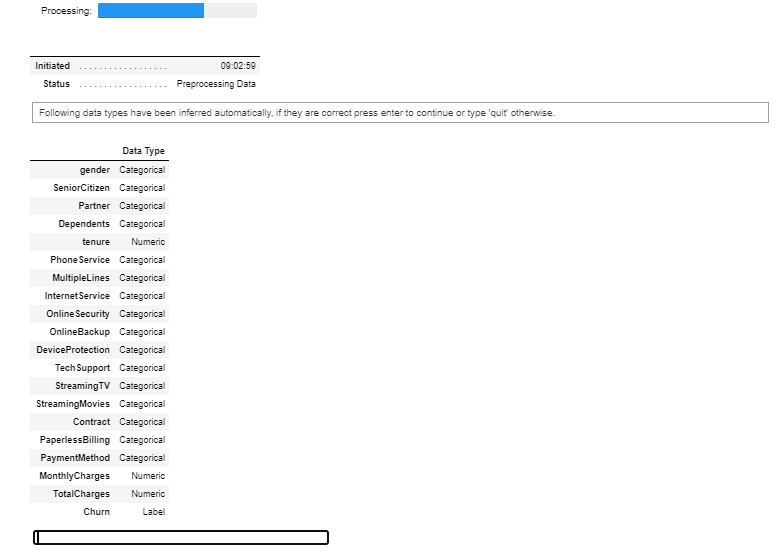

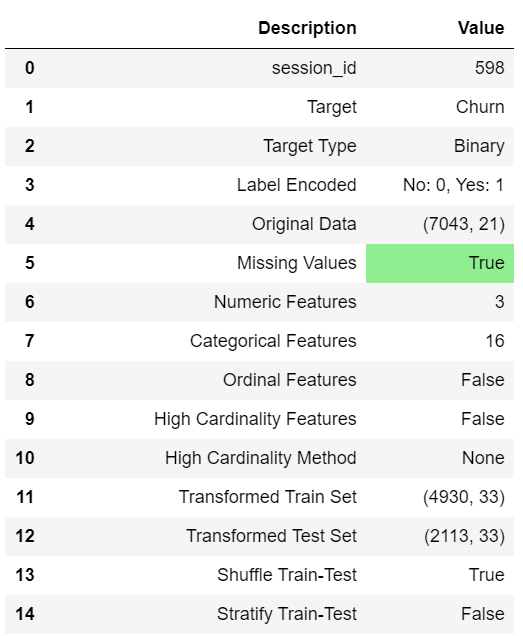

👉 Data Preparation

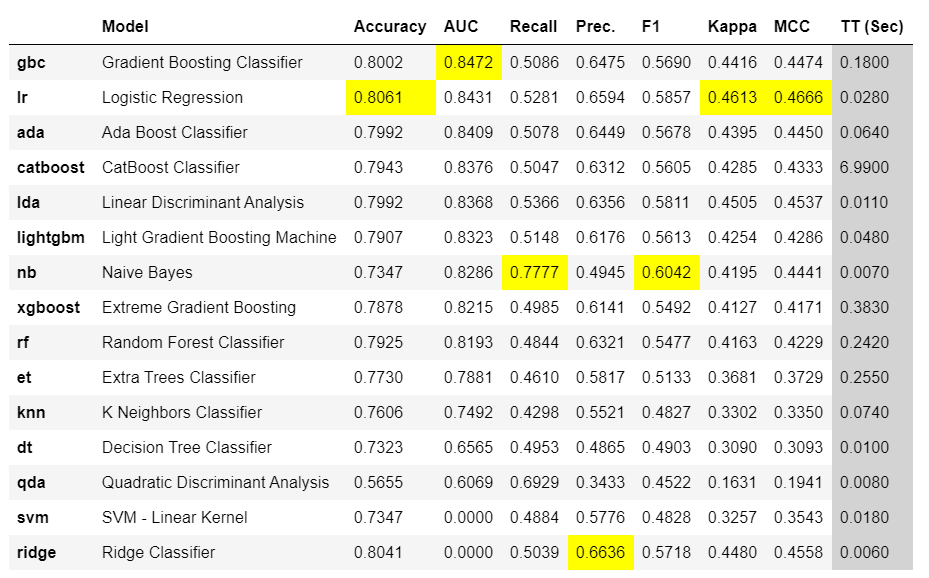

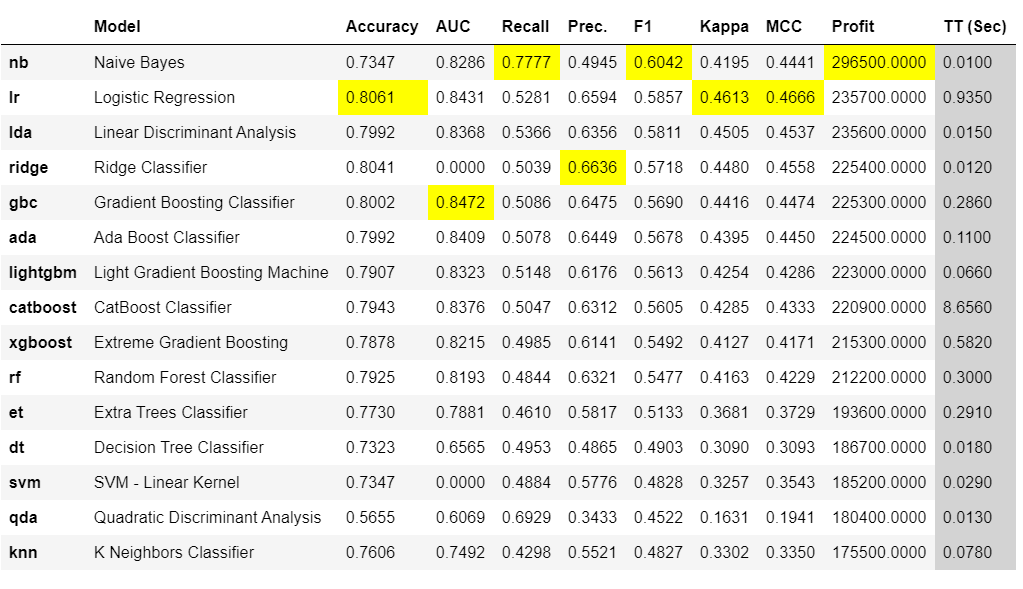

👉 Model Training & Selection

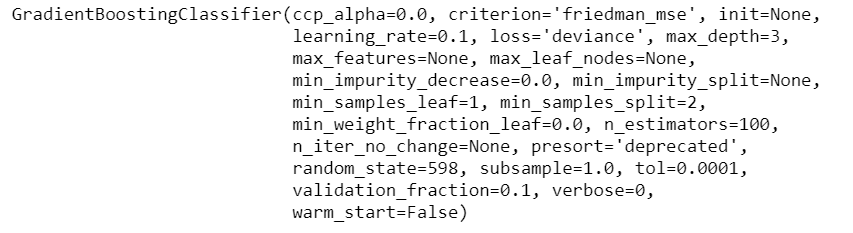

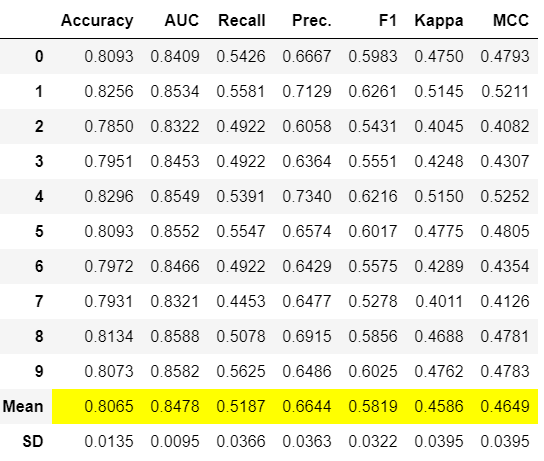

Hyperparameter Tuning

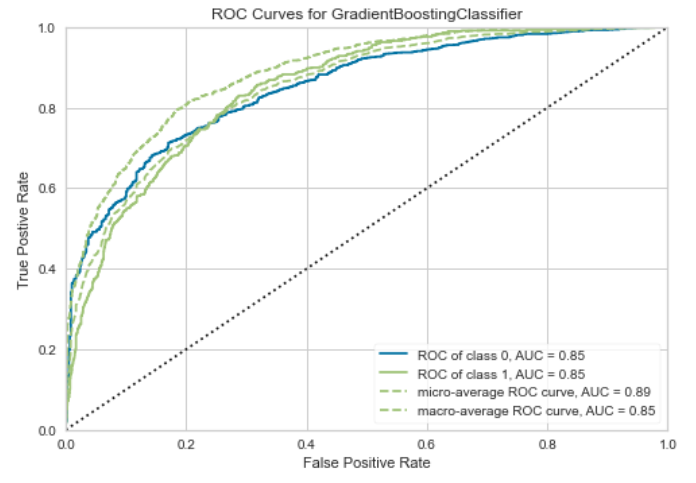

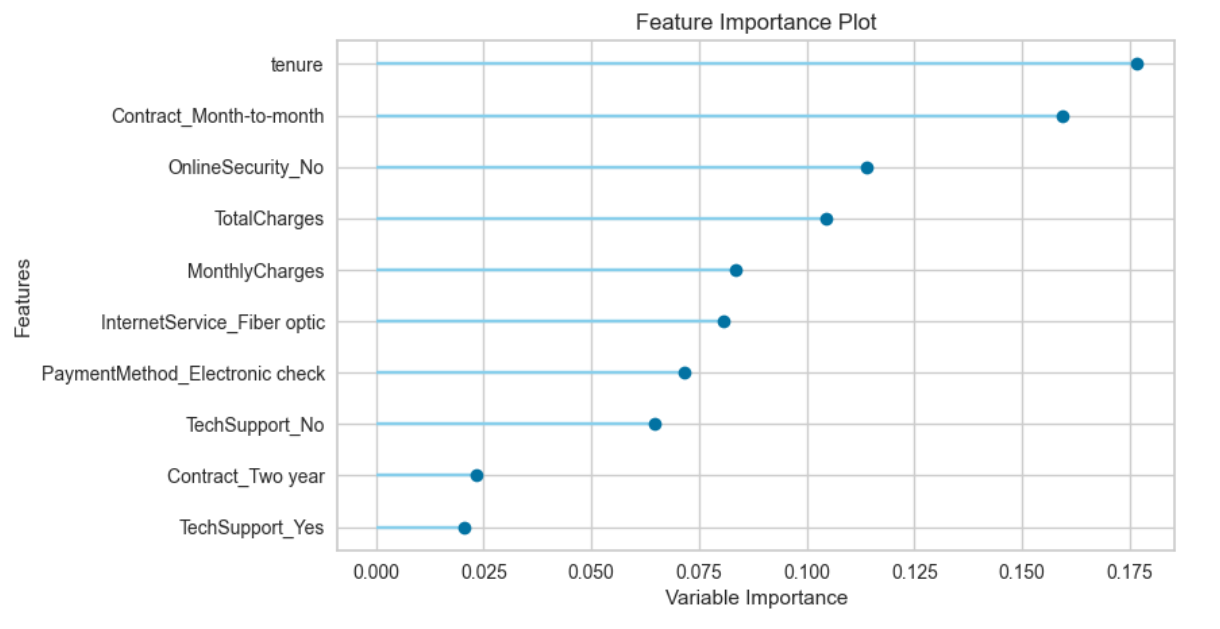

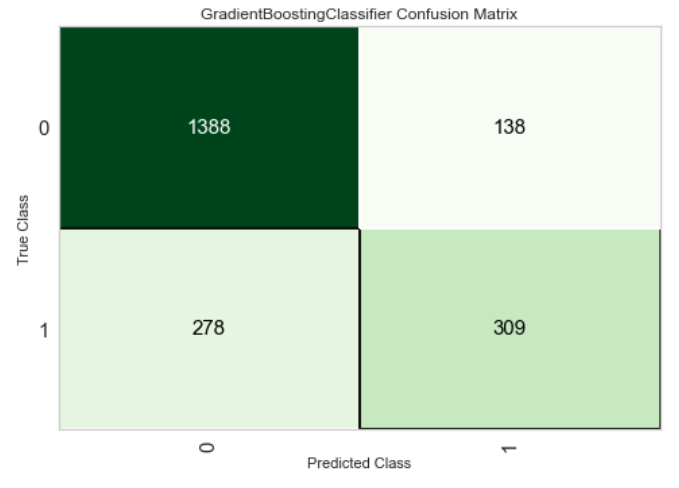

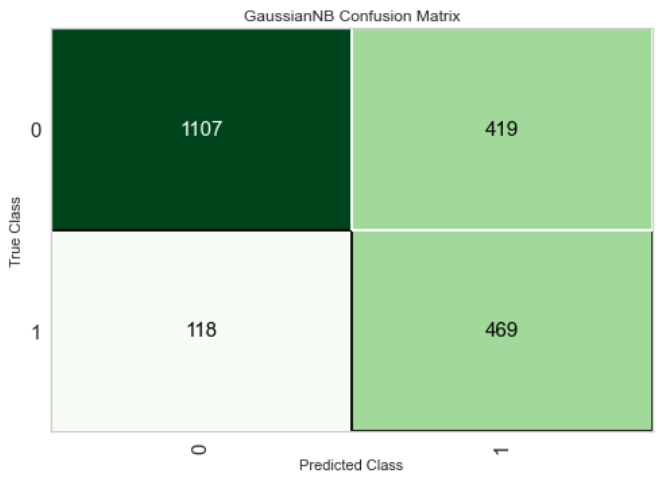

Model Analysis

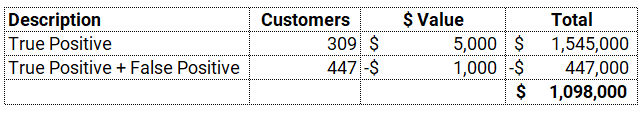

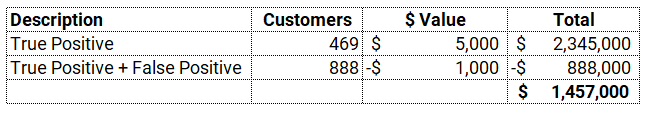

👉 Adding Custom Metric in PyCaret

***BAM!*** We have just increased profit by ~$400,000 with a model that scores 2% lower on AUC than the best model. How does this happen? Well, for starters, AUC, like any other out-of-the-box classification metric (Accuracy, Recall, Precision, F1, Kappa, etc.), is not a business-smart metric: it does not take into account the risk and reward proposition. Adding a custom metric and using it for model selection or optimization is a great idea and the right way to go.
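Here is a minimal, dependency-free sketch of how a profit-based metric can disagree with AUC. The economics are assumptions for illustration only, not figures from this tutorial:

- Each correctly flagged churner is retained, keeping a hypothetical customer value of $5,000.
- Every flagged customer receives a retention incentive costing a hypothetical $1,000.

Under these assumptions, profit = TP × 5,000 − (TP + FP) × 1,000. The helper names (`to_labels`, `profit_score`, `auc_score`) and the two toy "models" are invented for this example.

```python
from typing import List, Sequence

# Hypothetical economics (assumptions, not figures from this tutorial).
CUSTOMER_VALUE = 5000  # value kept when a true churner is retained
INCENTIVE_COST = 1000  # cost of the retention offer sent to each flagged customer


def to_labels(scores: Sequence[float], threshold: float = 0.5) -> List[int]:
    """Turn churn probabilities into 0/1 decisions (1 = send a retention offer)."""
    return [1 if s >= threshold else 0 for s in scores]


def profit_score(y_true: Sequence[int], y_pred: Sequence[int]) -> int:
    """Campaign profit: TP * CUSTOMER_VALUE - (TP + FP) * INCENTIVE_COST."""
    profit = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == 1:
            profit -= INCENTIVE_COST  # every flagged customer receives the offer
            if actual == 1:
                profit += CUSTOMER_VALUE  # true positive: a churner is retained
    return profit


def auc_score(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC: chance a random churner is scored above a random non-churner (ties = 0.5)."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy data: 3 churners, 5 non-churners, and churn probabilities from two made-up models.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
model_a = [0.55, 0.45, 0.40, 0.35, 0.20, 0.15, 0.10, 0.05]  # perfect ranking, but cautious
model_b = [0.90, 0.80, 0.30, 0.70, 0.60, 0.20, 0.10, 0.05]  # worse ranking, flags more churners

for name, scores in [("model_a", model_a), ("model_b", model_b)]:
    auc = auc_score(y_true, scores)
    profit = profit_score(y_true, to_labels(scores))
    print(f"{name}: AUC={auc:.3f}, profit=${profit:,}")
# model_a: AUC=1.000, profit=$4,000
# model_b: AUC=0.867, profit=$6,000
```

AUC only looks at how the scores rank customers. It ignores both the decision threshold and the cost and benefit of acting on each prediction, which is why the lower-AUC model earns more here. A function with the same `(y_true, y_pred)` shape can be registered with PyCaret's `add_metric`, e.g. `add_metric('profit', 'Profit', profit_score)`. It should then appear as an extra column in the `compare_models()` grid. This assumes the target is encoded as 0/1, with 1 meaning churn.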

Important Links

More PyCaret-related tutorials:
